Smooth movement in Unity

About Update, FixedUpdate and Rigidbody2D.

Intro

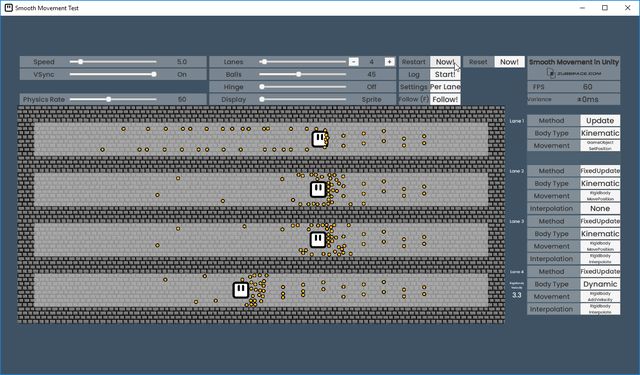

Let's take a look at the different ways you can move GameObjects in a Unity 2D game. I will discuss Update, FixedUpdate, rigidbody interpolation and other topics, and you can try out and compare different settings directly in the browser with a small test application. The goal is to move the player's in-game character as smoothly as possible.

Part 1 looks at the basics, covering V-Sync, the difference between Update and FixedUpdate, and the Time class in Unity.

Part 2 dives into kinematic and dynamic Rigidbody2D, interpolation and some of the problems it causes.

Part 3 will show you how to properly move a Rigidbody2D in Update by disabling Physics2D.autoSimulation and calling Physics2D.Simulate() manually, which avoids some of the problems of the previous parts.

TL;DR

- First decide if your game requires a kinematic or dynamic rigidbody for the character.

- A dynamic rigidbody reacts to collisions with other objects and cannot simply pass through them. Use this if realistic physics is required. Move it with AddForce() in FixedUpdate, using ForceMode2D.Force for continuous forces or ForceMode2D.Impulse for instant ones.

- A kinematic rigidbody can be moved freely according to your own rules. Use this for maximum flexibility. Move it by setting Rigidbody2D.velocity or calling MovePosition() in FixedUpdate.

- Rigidbody interpolation smooths out motion remarkably well for both types. But the character on the screen will lag behind by up to one physics step.

- By turning off Physics2D.autoSimulation, you can manually advance the physics simulation by calling Physics2D.Simulate(). This is a powerful way to use Rigidbody2D methods in Update instead of FixedUpdate without the need for rigidbody interpolation. Additionally, you can process all input without any delay.

- Rigidbody2D.MovePosition() temporarily overrides Rigidbody2D.velocity and ignores linear drag for that step. Take this into account when deciding which one to use.

- Keep V-Sync on in Unity, which is the default. Otherwise an unstable frame rate can lead to stuttering motion.

- Avoid character acceleration and deceleration. It takes time to get used to, and many players dislike it.

- Move cameras in LateUpdate.

Smooth movement test application

There are many different ways to move a character in a Unity game. In order to analyze the behavior, I wrote an application in which you can try and compare different approaches.

You can run the WebGL release right away in your browser. Alternatively you can download the appropriate release. You're invited to play around with it before reading the rest of the article:

→ WebGL Release (Runs in your browser)

→ Windows Release (Download, 18MB)

→ Linux Release (Download, 18MB)

→ Source Code on Github

Hint: The performance of the WebGL Release depends on your hardware and browser. If you run into performance issues, try a desktop release.

Why good character controllers matter

Now that Ludum Dare 44 is over, I have time to devote to other things again. I am satisfied with the outcome of the event and grateful for the feedback from the other developers. A few critical words about the movement behavior of my platformer Life-Cycle prompted me to write this article:

Player control feels very slippery, and sometimes the level/camera design is unfair in that you can't see ahead, where you might die.

gwinnell

There's actually quite a bit of game here, which is great for a jam game. One thing that I kept experiencing is some bounding box issues with the spikes. Like I would come back and my body would be impaled in the middle of a bouncy pad instead of spikes.

SilvervaleGames

A nice platformer, had a bit of fun with it. As someone else said, the character is a bit hard to control with his speed, so, I think I know what what you might be going for, but acceleration/deceleration doesn't work very well in any platformer. Overall, good job!

kuro

Controls are clean! Feels good to play, and with a bit of tweaking you could probably make it even better!

DaniDev

The code responsible for moving the player is called a character controller. Because the character usually stays at the center of the player's view, irregularities stand out a lot. Player input should lead to an immediate response, and any kind of stuttering should be avoided at all costs. Here's a short list of the most important points to consider when creating a character controller:

- The character must not stutter while moving.

- The character should interact with physics objects correctly.

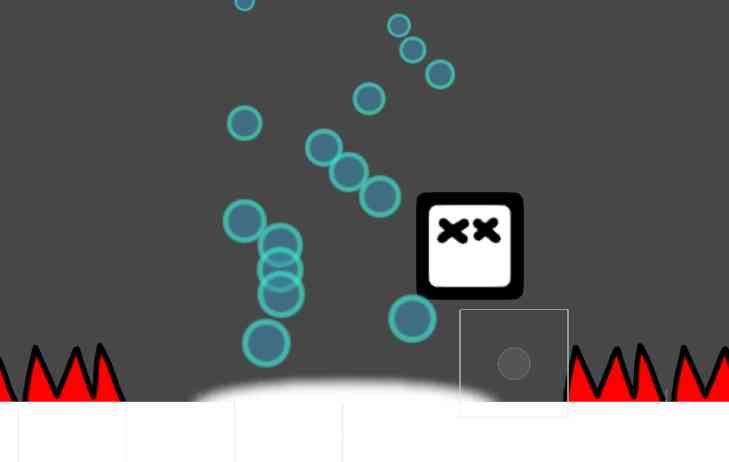

- The physical bounding box, which is responsible for collisions, and the character sprite must be in the same place.

- The effective movement of the character, triggered by input, must not be delayed.

- Acceleration and deceleration in response to player input can feel slippery. Avoid them.

- The camera must not lag behind the character.

While creating Life Cycle I violated all those rules due to sloppy mistakes! There was a slight, constant stuttering of the character sprite because I incorrectly interpolated the character myself. But there was also a bounding box issue, which can be seen here:

This issue is a common problem in Unity related to rigidbody interpolation and FixedUpdate. You can observe the difference between the bad and the improved movement code directly in Life Cycle by turning the Improved Character Movement option on or off on the Start Screen. I added the option after Ludum Dare was over, so the comments above relate to the switched-off state. In the improved version I moved the movement code from FixedUpdate to Update with manual physics synchronization, removed character acceleration and ditched camera interpolation. In some games measures like this are necessary, but do not change your code just yet! There are upsides and downsides to all approaches.

I'm a bit surprised that there were only a few comments addressing the erratic movement. The simplest explanation is that our perception differs a lot: some people react to small amounts of delay and stutter while others don't notice them at all. It is also possible to hide these issues in the character controller with game effects or the game environment. In my case, I think the initial character acceleration disguised the errors to some extent. Last but not least, the player's hardware is also an important factor. In any case, if someone reports that the movement could be improved, it should definitely be taken seriously.

Part 1: The basics

About V-Sync

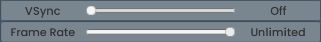

Your screen probably refreshes about 60 times per second. Unity games usually have V-Sync (vertical synchronization) enabled. As a result, after each call to Update the game waits for the screen to refresh before the next Update cycle starts, and you get a frames-per-second (FPS) value that matches the screen refresh rate. The current FPS is displayed in the upper right corner of the test application. Below it is a variance indicator, which displays the difference between the durations of the fastest and the slowest Update cycle within the last half second. With V-Sync on, the variance is small and stable.
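The variance indicator can be approximated with a sliding window over recent frame durations. The following C# sketch is engine-free and hypothetical: FrameVarianceTracker and its members are not taken from the test app. In Unity you could feed it Time.unscaledDeltaTime or a Stopwatch measurement once per Update.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not the test app's code: keeps the frame durations of the
// last `windowSeconds` and reports the spread between the slowest and fastest frame.
public class FrameVarianceTracker
{
    private readonly double _windowSeconds;
    // (end time of the frame, frame duration), oldest first.
    private readonly Queue<(double EndTime, double Duration)> _frames =
        new Queue<(double EndTime, double Duration)>();
    private double _now;

    public FrameVarianceTracker(double windowSeconds) => _windowSeconds = windowSeconds;

    // Call once per frame with the measured frame duration in seconds.
    public void AddFrame(double duration)
    {
        _now += duration;
        _frames.Enqueue((_now, duration));
        // Forget frames that ended before the window started.
        while (_frames.Count > 0 && _frames.Peek().EndTime < _now - _windowSeconds)
            _frames.Dequeue();
    }

    // Slowest minus fastest frame duration within the window, in seconds.
    public double Variance
    {
        get
        {
            double min = double.MaxValue, max = double.MinValue;
            foreach (var frame in _frames)
            {
                min = Math.Min(min, frame.Duration);
                max = Math.Max(max, frame.Duration);
            }
            return _frames.Count == 0 ? 0 : max - min;
        }
    }
}
```

A single slow frame raises the value for half a second and then drops out of the window again, which is why the indicator stays small and stable when every frame takes the same time.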

Now let's try to disable V-Sync. Move the V-Sync slider to the left. A new slider appears called "Frame Rate". Increase the Frame Rate to the maximum value which will display "Unlimited".

Now Unity calls Update as often as possible without paying attention to the screen refresh rate. In my case, the FPS value starts to oscillate and the variance increases. The character also starts to stutter on my screen. Just a tiny bit, but still visible if you compare it to the movement before.

The stutter is the result of the unstable Time.deltaTime value, which is used inside Update to calculate how far the character moves until the next Update cycle. Because deltaTime always contains the time since the previous Update cycle, it often contains a value that does not match the time until the next Update cycle. The movement per frame will be slightly wrong, making the character move a bit too fast or too slow.

There can also be visible screen tearing without V-Sync. This happens because the game engine first draws the screen to an output buffer, which is then used during the screen refresh. If the output buffer changes while a screen refresh is in progress, the upper part of the screen still displays the previous buffer data while the lower part already shows the updated data. V-Sync makes sure that the buffer update and the screen refresh never happen at the same time.

Therefore I advise you to always enable V-Sync. There's one exception: the FPS of the game may not reach the screen refresh rate. You can simulate this in the test app by increasing "Lane Count" and "Ball Count" to a high number. Unless you have top-of-the-line hardware, it will probably struggle and the FPS will go down. In such cases you could try to disable V-Sync and lower the Frame Rate slider to reach a stable FPS. But I believe most of the time it is better to keep V-Sync on and instead reduce the resolution, the in-game texture quality or the number of effects.

About Update and FixedUpdate

If you came this far, you have probably already heard about Update and FixedUpdate. Basically, Update runs before each rendered frame and FixedUpdate runs before each physics simulation step.

```csharp
using UnityEngine;

// Example component (the class name is arbitrary).
public class ConstantVelocityMover : MonoBehaviour
{
    private Vector3 _velocity = new Vector3(1, 0, 0);

    void Update()
    {
        // Move the GameObject with constant velocity, independent of the frame rate.
        transform.position += _velocity * Time.deltaTime;
    }
}
```

After each call to Update the scene is rendered to the output buffer. To achieve a smooth frame rate, Update is called as often as possible or, if V-Sync is enabled, as often as the display allows. Time.deltaTime inside Update may vary, and you need to take this into account if you want to move a GameObject with constant velocity. For example, if you set transform.position directly, don't forget to multiply the velocity by Time.deltaTime before you add the result to transform.position. That way the movement is as smooth as possible and the velocity stays the same regardless of whether you have a high, low or varying frame rate. This is the default setting in the test application, and you should compare all other types of movement to this baseline.
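To see why multiplying by Time.deltaTime makes the distance independent of the frame rate, here is an engine-free C# model. Plain doubles stand in for Vector3 and Time.deltaTime, and the class name is hypothetical.

```csharp
using System;

// Engine-free sketch of the Update() pattern: position += velocity * deltaTime.
// Plain doubles stand in for Unity's Vector3 and Time.deltaTime.
public class FrameRateIndependentMover
{
    public double Position { get; private set; }
    public double Velocity { get; }

    public FrameRateIndependentMover(double velocity) => Velocity = velocity;

    // Corresponds to one Update() call with that frame's deltaTime.
    public void Update(double deltaTime) => Position += Velocity * deltaTime;
}
```

Running one mover with 144 equal frames and another with four uneven frames that also add up to one second moves both by the same distance; only the size of the individual steps, and therefore the visual smoothness, differs.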

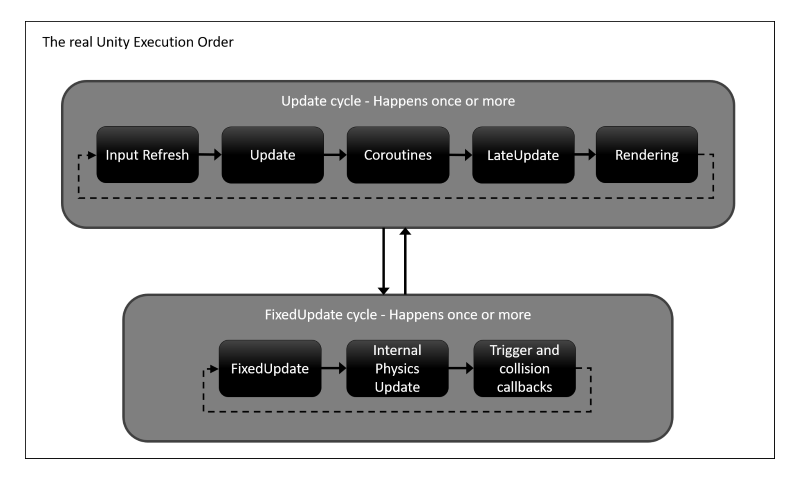

FixedUpdate, on the other hand, runs at a fixed time step. Time.deltaTime inside FixedUpdate always returns the same value (Time.fixedDeltaTime). The default is 0.02 s, corresponding to 50 physics steps per second. You can change the value in the Time settings of your Unity project or by setting Time.fixedDeltaTime directly. After each FixedUpdate cycle Unity steps the physics simulation forward for all objects: rigidbody positions are updated and collision and trigger callbacks are called. The Unity docs give more insight into the order of execution for event functions, but their chart is a bit misleading. Here is a better one:

All Update methods in your scripts will be called one after another and then all FixedUpdate methods. Those two cycles always run sequentially. Usually each Update cycle is followed by a single FixedUpdate cycle. But there are two notable exceptions.

Quite often there can be multiple Update cycles between two FixedUpdate cycles. This happens if the frame rate of the game is higher than the physics rate. This is a good sign: it means your game has more than enough time to render a frame. You can make this state more likely by increasing the frame rate, for example by disabling V-Sync, or by reducing the physics rate.

On the other hand, there can be times when multiple FixedUpdate cycles follow each other. Either an Update cycle took too long or the physics simulation is struggling to keep up with the requested physics rate. This is bad and leads to stuttering. Not even rigidbody interpolation can help you there.

You can observe these different behaviors in the test application. Reset the options and add a second lane with the FixedUpdate option. Now adjust the physics rate. If you lower the physics rate, there will be more Update than FixedUpdate cycles. If you raise the physics rate to the same level as the frame rate, the motion on the second lane should be quite smooth. Now slowly raise the physics rate above the frame rate. The motion on the second lane starts to stutter. The reason is that multiple FixedUpdate calls now follow each other, but a frame only gets rendered after an Update call. A varying number of FixedUpdate calls between Updates leads to stuttering.
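The following engine-free C# sketch models these patterns with a time accumulator. It is an assumption about how a fixed-timestep loop typically works, not Unity's documented internals, and the names are hypothetical. It returns how many FixedUpdate calls would run per rendered frame:

```csharp
using System;
using System.Collections.Generic;

// Simplified, engine-free model of a fixed-timestep loop based on a time accumulator.
// This is an assumption about how such loops typically work, not Unity's documented
// internals; FixedStepModel and its parameters are hypothetical.
public static class FixedStepModel
{
    // For each rendered frame (given its real duration in seconds), returns how many
    // FixedUpdate calls would run before that frame's Update.
    public static List<int> FixedStepsPerFrame(IEnumerable<double> frameDurations,
                                               double fixedDeltaTime,
                                               double maximumDeltaTime)
    {
        var stepsPerFrame = new List<int>();
        double accumulator = 0;
        foreach (double duration in frameDurations)
        {
            // A single frame never adds more than maximumDeltaTime of game time.
            accumulator += Math.Min(duration, maximumDeltaTime);
            int steps = 0;
            // The small tolerance absorbs floating-point rounding.
            while (accumulator >= fixedDeltaTime - 1e-9)
            {
                accumulator -= fixedDeltaTime;
                steps++;
            }
            stepsPerFrame.Add(steps);
        }
        return stepsPerFrame;
    }
}
```

In this model a 60 Hz display with a 50 Hz physics rate gets 50 FixedUpdate calls per second, with every sixth frame getting none; a 100 Hz physics rate produces frames with two calls. The maximumDeltaTime parameter caps how much game time one frame may add, which matters under heavy load.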

Other tidbits of wisdom:

The default physics step interval is questionable

Let me just quote MelvMay, who works at Unity and gave me feedback on this blog post:

Also, the default fixed-update time of 50hz is bonkers. I am not sure who came up with that originally but it's nonsense. It should be set to 60hz which would mean less likelyhood of the fixed-update time-base being out-of-sync with the game-time i.e. fixed=update on a 1:1.

MelvMay

You can do that by setting Time.fixedDeltaTime = 1f / 60f;

FixedUpdate is necessary for a stable physics simulation

By running physics at a fixed time step, independently of the frame rate, rigidbody velocity, acceleration and collisions are handled exactly the same way at low and high frame rates. No fixed time step will ever be skipped, even when the frame rate drops to a crawl. In that case the physics simulation may run slower than real time, but that won't have any effect on the result of physical interactions.

Physics will actually progress right after FixedUpdate

If you look closely at the order of execution for event functions, you will find that the "Internal Physics Update" always follows a FixedUpdate cycle. The "Internal Physics Update" actually moves the objects forward, and that's where collisions are registered and resolved. Consequently, if you call Rigidbody2D.MovePosition() multiple times for the same object before a physics step, only the last call has an effect. Rigidbody2D.AddForce() is an exception, because the forces are added together.

You should move rigidbodies in FixedUpdate

This is the common viewpoint of the community and a consequence of the previous two points. The physics simulation in Unity does not care about Update cycles. You cannot anticipate how many Update and FixedUpdate cycles will run in succession, so you should move rigidbodies once, right before the physics simulation step, in FixedUpdate. Otherwise the behavior can have unintended consequences that may be confusing or even game-breaking.

(There are some advantages to moving rigidbodies solely in Update, but I will come to that in Part 3)

The input state only refreshes before an Update cycle

Actually, you can use the Input class within FixedUpdate, but be aware that this only works as expected for continuous states like Input.GetAxis() or Input.GetButton(). Input.GetButtonDown(), on the other hand, keeps returning true until the next Update cycle. If there is no FixedUpdate call in between, you lose the Down event. If there are multiple FixedUpdate calls in between, you may handle the Down event more than once. Ouch!

What you should do instead is register those Up and Down events in Update and forward them to FixedUpdate in a FIFO queue. That way you can make sure that you process each event exactly once.
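A minimal sketch of such a queue in plain C#, assuming button names are strings (ButtonEventQueue and its methods are hypothetical). In Unity you would call Record() from Update() whenever Input.GetButtonDown() returns true, and ProcessAll() from FixedUpdate():

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: Update() records button-down events and FixedUpdate()
// consumes them, so each press is handled exactly once, in order.
public class ButtonEventQueue
{
    private readonly Queue<string> _pending = new Queue<string>();

    public int Count => _pending.Count;

    // Call from Update(), e.g. when Input.GetButtonDown("Jump") returns true.
    public void Record(string buttonName) => _pending.Enqueue(buttonName);

    // Call from FixedUpdate(): hands every event recorded since the last call to the
    // handler (oldest first) and empties the queue.
    public void ProcessAll(Action<string> handler)
    {
        while (_pending.Count > 0)
            handler(_pending.Dequeue());
    }
}
```

Two Update cycles without a FixedUpdate in between simply leave two events in the queue, and a second FixedUpdate in a row finds the queue empty, so neither of the failure cases above can occur.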

Move cameras in LateUpdate

If you have many objects with Update callbacks, they are called in no guaranteed order (unless you adjust the script execution order, but let's ignore that). To follow an object with a camera, set transform.position of the camera in LateUpdate. LateUpdate is called after all Update calls, right before rendering, so you can be sure that the position of the followed object won't change again in the current frame.

```csharp
using UnityEngine;

// Part of a follow camera script:
public class FollowCamera : MonoBehaviour
{
    public Transform FollowTarget { get; set; }

    // LateUpdate will be called after all Update calls.
    void LateUpdate()
    {
        if (FollowTarget != null)
        {
            // Move the camera to the (maybe interpolated) position, keeping the
            // camera's own z so the 2D scene stays in front of the camera.
            Vector3 target = FollowTarget.position;
            transform.position = new Vector3(target.x, target.y, transform.position.z);
        }
    }
}
```

Also use the followed object's transform.position rather than its Rigidbody2D.position for the camera. transform.position contains the interpolated position if rigidbody interpolation is enabled; Rigidbody2D.position does not.

About Time.time and Time.deltaTime

First and foremost: You only ever need to use Time.time and Time.deltaTime in Update and FixedUpdate. In FixedUpdate the values will be equal to Time.fixedTime and Time.fixedDeltaTime.

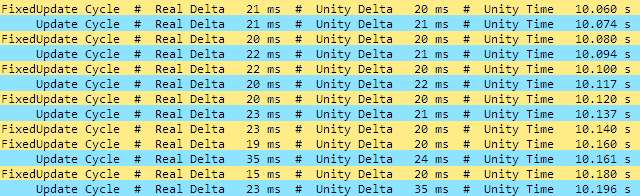

To analyze the Update cycle and the Time values, enable the log in the test app. In the WebGL version you can observe individual Update and FixedUpdate cycles, their Time.deltaTime values and the deltaTime values measured with the Stopwatch class in the browser console. In the offline version, a file called "SmoothMovement.log" is created with the same information. Here's an excerpt:

The first column, "Real Delta", contains the time in milliseconds since the last cycle, measured by a System.Diagnostics.Stopwatch instance. There are two separate Stopwatches for Update and FixedUpdate. The second column, "Unity Delta", contains the value returned by Time.deltaTime in milliseconds. The last column, "Unity Time", contains Time.time in seconds.

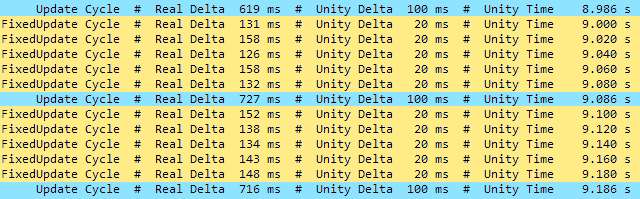

There's a lot of information somewhat hidden in this log. One thing that is easy to observe is that Time.time, shown in the last column, is always increasing. Moreover, in FixedUpdate Time.time increases in constant steps of Time.deltaTime, which equals Time.fixedDeltaTime. This is always the case, even if your game is under heavy load. Here is an extreme example, where characters are moved with Rigidbody2D.MovePosition() on 100 lanes with an increased ball count:

When you increase the load on the Unity engine by adding too much to display, the duration of each cycle increases. FixedUpdate then runs more often than Update, because Unity tries to run the physics updates according to Time.fixedDeltaTime. After a FixedUpdate cycle there won't be any time left for an Update cycle, but because of Time.maximumDeltaTime (100 ms by default, also adjustable in the project settings), there will still be an Update call after 5 FixedUpdate cycles, which together advance Time.time by 100 ms. The game starts to stutter extremely, but that's still better than no screen update at all.
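With the 100 ms and 20 ms values from the paragraph above, and a hypothetical frame that takes 300 ms of real time to process, the arithmetic works out like this:

$$
n_{\text{FixedUpdate}} = \frac{\texttt{Time.maximumDeltaTime}}{\texttt{Time.fixedDeltaTime}} = \frac{100\,\text{ms}}{20\,\text{ms}} = 5,
\qquad
\frac{\Delta t_{\text{game}}}{\Delta t_{\text{real}}} = \frac{100\,\text{ms}}{300\,\text{ms}} \approx 0.33
$$

Such a frame still gets its Update call, but game time advances at only about a third of real time.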

Other tidbits of wisdom:

Time.time does not reflect the real time

If you need to move things in real time, use something else, like Time.realtimeSinceStartup or System.Diagnostics.Stopwatch.

The sequence of Update and FixedUpdate calls is unpredictable

I did not find a reliable way to tell whether the next cycle will be an Update or a FixedUpdate call. The easiest thing is to just use Time.time and Time.deltaTime and simply assume that the frame rate is fine.

Part 2: Let's move!

About Rigidbody2D

The physics engine needs to handle stationary and moving objects. Both types need a collider component, but the Rigidbody2D component tells the physics engine that the object will move. You can move and rotate an object without a rigidbody, but the physics engine will probably need to do some unnecessary work. Therefore, if an object moves, add a Rigidbody2D.

To make matters more complicated, Rigidbody2D can run in 2 different modes: Kinematic or Dynamic.

A Kinematic rigidbody will not move unless some code sets transform.position, Rigidbody2D.position or Rigidbody2D.velocity, or calls Rigidbody2D.MovePosition(). In each step the physics engine moves the rigidbody to its target position, regardless of external forces or gravity. All collisions are ignored, and kinematic rigidbodies can overlap static objects or each other. This type is most commonly used to move player or enemy characters around, or to move platforms or doors.

A Dynamic rigidbody can be moved the same way as a kinematic one. Additionally, Rigidbody2D.AddForce() can be used. The main difference is that dynamic objects react to collisions with dynamic, kinematic or static objects in a realistic way. This type can also be used to move characters around, but it is particularly suitable for objects that must always collide with the environment. Think of a thrown ball or falling debris.

Now, which one should you use for a player character? I believe that most of the time a Kinematic rigidbody should be used, because you get full control over the movement. You can decide through code how the character responds to input or the environment. You can also bend or break the rules of physics if necessary, like moving through other colliders. Jump-and-Runs are the perfect use case for this type. Drawback: a lot of custom scripting. You need to handle collisions with the environment yourself using raycasts or a grid (explained later), and you need to handle velocity and friction yourself. You also need to respond to collisions with other objects if required.

If your player character must always respond according to the rules of physics, a Dynamic rigidbody could be used. The player will collide with all other objects realistically through forces. Space shooters and driving games are prime examples of this style. Drawback: motions that should intentionally not be physically correct are hard to replicate, and characters can get completely blocked by other colliders. Consider the latter point: should it be possible for a player to push through hordes of enemies? If yes, maybe consider a kinematic rigidbody.

Both types can be used to move characters around, but the resulting movement style and problems are different. Choose wisely.

Other tidbits of wisdom:

Set the "Static" flag to true for static objects.

Stationary objects only need a collider component for other moving objects to run into. Additionally, the static flag allows Unity to combine the meshes of multiple static objects into one, if the corresponding project setting is enabled. You can change the position of those objects, but avoid it for performance reasons.

You should interact with Rigidbody2D only in FixedUpdate.

In the section about Update and FixedUpdate I already mentioned why FixedUpdate is necessary. Unity recommends moving rigidbodies only in FixedUpdate. The result is a stable physics simulation under all conditions and rigidbodies that respond predictably to movement changes in your scripts.

(Again: There are some advantages to moving rigidbodies solely in Update, but I will come to that in Part 3)

Avoid setting transform.position or Rigidbody2D.position directly.

You can set transform.position or Rigidbody2D.position directly, and in some cases a sudden position change may even be required, for example to beam a character to another place, but the resulting physical interactions won't be correct. You can easily verify this in the test app if you increase the ball count and set the movement type to either "GameObject SetPosition" or "Rigidbody SetPosition". The balls the character collides with are pushed away but unexpectedly come to a standstill.

What happens is that the GameObject is instantly beamed to the new position, possibly overlapping dynamic rigidbodies. Those rigidbodies are then pushed out of the overlap in a simple manner, without any forces.

There are small differences between setting transform.position and Rigidbody2D.position. The former changes the GameObject position directly, even in Update. The latter only takes effect after the next FixedUpdate cycle. Setting Rigidbody2D.position has the slight advantage that you can enable rigidbody interpolation.

Move kinematic rigidbodies by setting velocity or MovePosition()

You can set velocity once and the kinematic rigidbody will keep this velocity until you change it again. But you can also set velocity each time FixedUpdate() runs. If you use MovePosition(), you need to call it each time FixedUpdate() runs for a continuous movement.

Applied like this, there's no visible difference between the two methods. Both lead to correct physical responses from dynamic rigidbodies colliding with the kinematic rigidbody on its way. No matter how far the kinematic rigidbody travels during a frame, it hits all bodies in between, in contrast to setting Rigidbody2D.position directly.

The difference is a semantic one: do you prefer setting the direction of the movement or the next position? In some cases it even makes sense to use them together. For example, you could apply velocity in response to a held-down key, but when the character is about to cross a boundary, use MovePosition to restrict the movement.

I also have to mention that MovePosition() has some special properties, which I will look at after the next point.

Move dynamic rigidbodies by calling AddForce() with ForceMode2D.Force or ForceMode2D.Impulse

Dynamic rigidbodies are meant to mimic realistic physical interactions. In real life, objects are governed by physical forces. They need to speed up, slow down and collisions transfer force from one object to another. Such an accurate simulation can be achieved by using AddForce().

This is even more important when you attach other objects to the character with joints. Enable the Hinge Joint option in the test app and observe how the attached ball affects the movement of the character. Only when the character is moved by AddForce() does the hinge joint properly calculate the forces between both objects.

You can set the velocity directly or use MovePosition() on a dynamic rigidbody, but all other forces are overwritten in an instant, which is unrealistic but sometimes necessary.

The difference between the two force modes, ForceMode2D.Force and ForceMode2D.Impulse, is a bit confusing at first. With ForceMode2D.Force (the default), the value you supply to AddForce() is a force in newtons that acts on the rigidbody during the next physics step. If you call AddForce() in FixedUpdate() for exactly 1 second with value 1 on an object with mass 1, its velocity changes by 1 in total. In other words, the result is an acceleration according to the formula acceleration = force / mass, derived from force = mass * acceleration. As you can see, the acceleration is independent of the number of physics steps per second. As soon as you stop calling AddForce(), the acceleration is gone.

On the other hand, if you supply the same value to AddForce() with ForceMode2D.Impulse, the whole change is applied instantly! A similar example as before: if you call AddForce() with ForceMode2D.Impulse once in FixedUpdate() with value 1 on an object with mass 1, its velocity changes by 1 immediately. Use this for explosions or situations where an immediate transfer of force is necessary.
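The arithmetic behind both modes can be checked with a small engine-free model. It assumes simple per-step integration and ignores drag and gravity; PointMass and its methods are hypothetical stand-ins for calling AddForce() with ForceMode2D.Force or ForceMode2D.Impulse:

```csharp
using System;

// Engine-free sketch of how a body's velocity changes under the two ForceMode2D
// modes, assuming simple per-step integration with drag and gravity ignored.
// The method names are hypothetical stand-ins for AddForce(..., ForceMode2D.Force)
// and AddForce(..., ForceMode2D.Impulse).
public class PointMass
{
    public double Mass { get; }
    public double Velocity { get; private set; }

    public PointMass(double mass) => Mass = mass;

    // ForceMode2D.Force: the force (N) acts for one fixed step, dv = F / m * dt.
    public void ApplyForceForOneStep(double force, double fixedDeltaTime) =>
        Velocity += force / Mass * fixedDeltaTime;

    // ForceMode2D.Impulse: the impulse (N*s) changes velocity at once, dv = J / m.
    public void ApplyImpulse(double impulse) => Velocity += impulse / Mass;
}
```

Applying a force of 1 for one second at 50 or at 100 steps per second ends with the same velocity of 1, while a single impulse of 1 on a body with mass 2 changes its velocity by 0.5 right away.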

MovePosition() overrides velocity, but only temporarily

Many thanks to MelvMay from Unity, who explained it to me:

[...] MovePosition temporarily sets the linear velocity required to move to the specified position over a single simulation step. It does this by storing any existing linear velocity and linear drag and assigning the internal velocity. When the simulation runs it uses this internal linear velocity to move to the position and this means it moves through the interviening space and collides with everything along the way. When the simulation step has finished it reassigns the stored velocity.

MelvMay

You can use MovePosition() for kinematic and dynamic rigidbodies. It's well suited for kinematic ones, but in combination with dynamic rigidbodies it behaves a bit unusually. MovePosition() overrides the current velocity of the rigidbody, moves the object to the target position within one physics step, ignoring Rigidbody2D.drag, and then restores the previous velocity.

So, in combination with dynamic rigidbodies you could use MovePosition to...

a) ...make sure the character moves to the target position within one physics step,

b) ...re-position the character without drag affecting it,

c) ...re-position the character without affecting the current velocity.

One scenario where MovePosition() makes sense: you need to spawn a rigidbody at a spawn point that is already occupied by another rigidbody. You can then move the offender away with MovePosition().

Another scenario I can come up with, where MovePosition() could be useful, would be some kind of "Charge-Attack", which happens for a short duration and where the player needs to collide with everything in-between, and afterwards continues with the previous velocity.
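The behavior MelvMay describes can be modeled in one dimension like this. It is a hedged sketch of the described idea only, not Unity's source: collisions are left out, the damping formula is an assumption, and Body1D is a hypothetical name. It also shows why only the last MovePosition() call before a physics step has an effect:

```csharp
using System;

// Engine-free 1D model of the MovePosition() behavior described above (no collisions).
// It sketches the described idea, not Unity's actual implementation; the drag formula
// is an assumption chosen for simplicity.
public class Body1D
{
    public double Position { get; set; }
    public double Velocity { get; set; }
    public double LinearDrag { get; set; }

    private double? _moveTarget;

    // Only the last call before a step counts, because the target is overwritten.
    public void MovePosition(double target) => _moveTarget = target;

    // Advances the simulation by one fixed step of length dt.
    public void Step(double dt)
    {
        if (_moveTarget.HasValue)
        {
            double storedVelocity = Velocity;
            Velocity = (_moveTarget.Value - Position) / dt; // temporary velocity for one step
            Position += Velocity * dt;                      // no drag applied during this step
            Velocity = storedVelocity;                      // restore the previous velocity
            _moveTarget = null;
        }
        else
        {
            Velocity /= 1.0 + LinearDrag * dt; // simple linear damping (assumption)
            Position += Velocity * dt;
        }
    }
}
```

After a MovePosition() step the body sits exactly at the target and still has its old velocity; only the following regular step applies drag again.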

About rigidbody interpolation, input lag and display lag

If you use the rigidbody component according to the rules laid out in the previous sections, you get the option to enable rigidbody interpolation or extrapolation. Interpolation works remarkably well. It smoothly interpolates the rendered position towards the new rigidbody position calculated after a FixedUpdate call. Try comparing an object moved by setting transform.position in Update to an object moved by MovePosition in FixedUpdate with interpolation in the test app. The result is nearly identical. You can also change the Display slider to see the sprite and the rigidbody position (red rectangle) at the same time. Reduce the physics rate for the full effect.

So, this is just a visual interpolation, not a physical one. The motion definitely looks smoother, but the visual representation will also lag behind the real position of the rigidbody. This is a problem, if the player needs to accurately control the character through a deadly world filled with monsters and hazards, where each misstep is punished. The delay increases the faster the character moves.

Additionally, moving an object in FixedUpdate also leads to input lag. Because Unity samples user input before each Update call and Updates can happen more often than FixedUpdate calls, a button press has to wait for the next FixedUpdate before you can instruct a rigidbody to move, jump or whatever. You also need to pass KeyDown and KeyUp events from Update to FixedUpdate in a FIFO queue somehow; otherwise you might miss some of those events in FixedUpdate if you used the Input class there directly.

After careful consideration I finally decided to move the kinematic character in Life-Cycle in Update and test collisions with raycasts. If you only use Update to move rigidbodies around, the world will not fall apart (and your program won't crash), even if you just set transform.position. But there's definitely a better way, using manual physics synchronization and Rigidbody2D correctly. This completely eliminates the need for interpolation, and keyboard events can be processed immediately. The game runs more smoothly and controlling the character feels very tight.
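The display lag of interpolation can be estimated with a small model. It assumes the rendered position is blended linearly between the previous and the latest physics position, which is the general idea behind interpolation rather than Unity's exact implementation; the names are hypothetical:

```csharp
using System;

// Sketch of the idea behind rigidbody interpolation: the rendered position is blended
// between the previous and the latest physics position. This illustrates that
// assumption; it is not Unity's implementation.
public static class InterpolationLag
{
    // alpha: fraction of a fixed step elapsed since the latest physics step (0..1).
    public static double RenderedPosition(double previous, double latest, double alpha) =>
        previous + (latest - previous) * Math.Clamp(alpha, 0.0, 1.0);

    // How far the rendered position trails the latest physics position
    // for a body moving at constant speed.
    public static double Lag(double speed, double fixedDeltaTime, double alpha) =>
        speed * fixedDeltaTime * (1.0 - Math.Clamp(alpha, 0.0, 1.0));
}
```

Right after a physics step (alpha = 0) the sprite trails the rigidbody by a full step's distance, and doubling the speed doubles that distance, which matches the observation that the delay grows with the character's speed.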

About kinematic rigidbody collisions

So maybe you decide to use a kinematic rigidbody to have full control over the movement. But now your character will not collide with static colliders and other kinematic rigidbodies.

Each frame you sum all velocity vectors (the horizontal and vertical velocity determined by key presses), and you probably also want to add a gravity vector. Normalize the input direction so that diagonal movement is not faster than straight movement. Then make sure the maximum velocity is respected with Vector3.ClampMagnitude(). Now, before you set Rigidbody2D.velocity or call Rigidbody2D.MovePosition(), you need to determine how far the character is allowed to move in each major direction.
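A sketch of this velocity composition in plain C#, using System.Numerics.Vector2 as a stand-in for Unity's Vector2 (System.Numerics has no ClampMagnitude(), so a small helper replaces it). The input is only normalized when its length exceeds 1, which keeps partial analog input intact and avoids normalizing a zero vector; that detail is an assumption of this sketch, and VelocityComposer is a hypothetical name:

```csharp
using System;
using System.Numerics;

// Sketch of composing a character velocity from input and gravity.
// System.Numerics.Vector2 stands in for UnityEngine.Vector2 here.
public static class VelocityComposer
{
    // Hand-written equivalent of Unity's ClampMagnitude for System.Numerics vectors.
    public static Vector2 ClampMagnitude(Vector2 v, float maxLength)
    {
        float length = v.Length();
        return length > maxLength ? v * (maxLength / length) : v;
    }

    // input: raw axis values in [-1, 1]; speed, gravity and maxSpeed are tuning values.
    public static Vector2 Compose(Vector2 input, float speed, Vector2 gravity, float maxSpeed)
    {
        // Normalize so diagonal input is not faster than straight input.
        Vector2 direction = input.LengthSquared() > 1f ? Vector2.Normalize(input) : input;
        Vector2 velocity = direction * speed + gravity;
        return ClampMagnitude(velocity, maxSpeed);
    }
}
```

Diagonal input then yields the same speed as straight input, and a fast fall is capped at maxSpeed.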

There are two ways I know of to determine how far the character may move: raycasts or grid collisions.

Raycast collisions

With raycasts you can get the distance from the ray origin to the nearest object. That's the maximum distance your character may travel in the ray direction. Do this for all major directions and you get 4 maximum distances. You only need to make sure that the player never moves farther than that in the current physics step. Tip: use Debug.DrawLine or Debug.DrawRay to visualize the raycasts.

But the approach comes with some caveats. If your environment tiles are as big as the player, cast at least 3 rays in each direction. Otherwise the rays could miss a tile that is fully aligned with the player. Also shift the ray origins inward from the edge of the player. Otherwise a ray can sometimes start inside a wall or floor, even though it should start exactly on it. It's hard to tell in the recording above, but the rays start on the inner edge of the black border around the player. This complicates the code, because you then need to subtract that inward shift from the ray distance. Also make sure that the raycasts ignore the player collider.
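The two caveats can be sketched as follows. First, spread several ray origins along the edge. Second, subtract the inward shift (often called a skin width) from the shortest hit. SkinRaycast, RayOrigins, AllowedDistance and skinWidth are hypothetical names. The hit distances are passed in as numbers instead of coming from Physics2D.Raycast, so this covers only the bookkeeping around the raycasts.

```csharp
using System;
using System.Collections.Generic;

public static class SkinRaycast
{
    // Evenly spaces rayCount origins along one edge of the player's box,
    // given the edge's start and end coordinates along that edge.
    public static List<float> RayOrigins(float edgeStart, float edgeEnd, int rayCount)
    {
        if (rayCount < 2)
            throw new ArgumentException("Use at least two rays.", nameof(rayCount));

        var origins = new List<float>();
        float spacing = (edgeEnd - edgeStart) / (rayCount - 1);
        for (int i = 0; i < rayCount; i++)
            origins.Add(edgeStart + i * spacing);
        return origins;
    }

    // The rays start skinWidth inside the player, so the free distance in front
    // of the real edge is the shortest hit minus that inward shift.
    public static float AllowedDistance(IEnumerable<float> hitDistances, float skinWidth)
    {
        float shortest = float.PositiveInfinity;
        foreach (float distance in hitDistances)
            shortest = Math.Min(shortest, distance);
        return Math.Max(0f, shortest - skinWidth);
    }
}
```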

Grid collisions

I haven't actually implemented this myself. The approach would use a tile grid that tells you exactly which tiles the player can move on and which tiles block the player. Instead of casting rays each frame, you only need to determine the tile where the player currently is. From there, you measure the distance from the character's bounding box to the next blocked tile by inspecting the grid. Sounds simple, right?
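The approach above is untested, so treat the following only as a rough sketch of the idea for one direction (moving right). It assumes a hypothetical bool grid that starts at the world origin with square tiles of size tileSize. It checks every row that the player's bounding box overlaps. GridCollision and FreeDistanceRight are made-up names.

```csharp
using System;

public static class GridCollision
{
    // Assumptions: the grid starts at world origin (0, 0), tile (x, y) covers
    // [x * tileSize, (x + 1) * tileSize) horizontally (same for y), and
    // blocked[x, y] is true for tiles the player cannot enter.
    // Returns how far the player's box may move to the right.
    public static float FreeDistanceRight(bool[,] blocked, float tileSize,
                                          float rightEdgeX, float bottomY, float topY)
    {
        int width = blocked.GetLength(0);
        int height = blocked.GetLength(1);

        // Rows the player's box overlaps vertically.
        int firstRow = Math.Max(0, (int)Math.Floor(bottomY / tileSize));
        int lastRow = Math.Min(height - 1, (int)Math.Ceiling(topY / tileSize) - 1);

        // Walk the columns to the right, starting with the one containing the edge.
        int startColumn = Math.Max(0, (int)Math.Floor(rightEdgeX / tileSize));
        for (int x = startColumn; x < width; x++)
        {
            for (int y = firstRow; y <= lastRow; y++)
            {
                if (blocked[x, y])
                    return Math.Max(0f, x * tileSize - rightEdgeX);
            }
        }

        // No blocked tile found: treat the end of the grid as a wall.
        return Math.Max(0f, width * tileSize - rightEdgeX);
    }
}
```

The other three directions would follow the same pattern with mirrored loops.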

About Rigidbody 2D and 3D differences

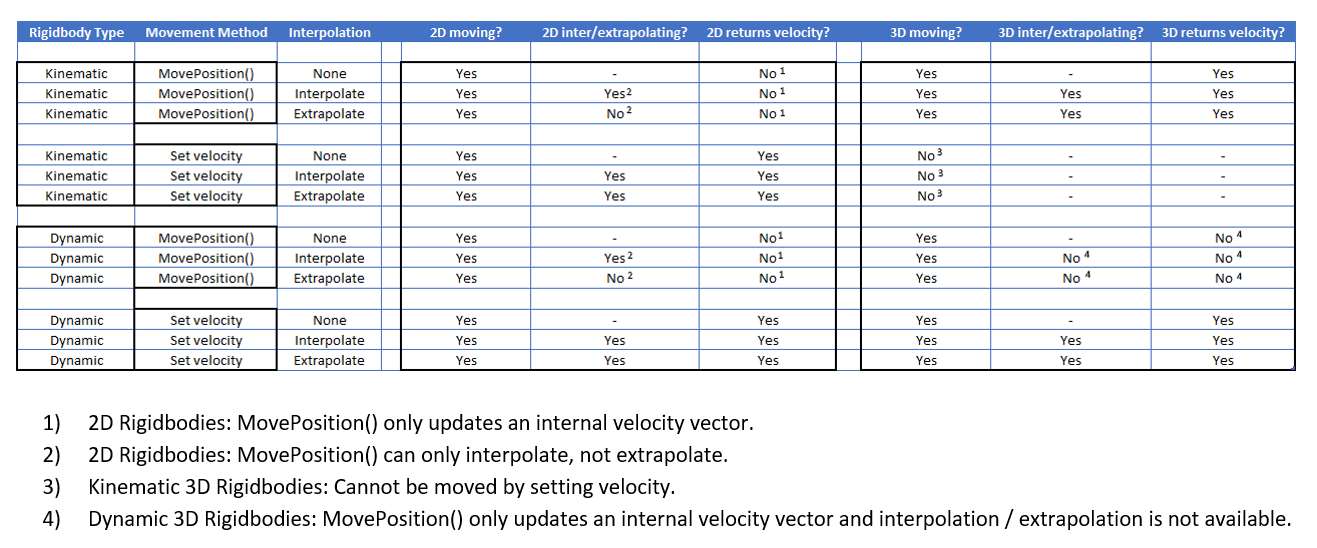

I haven't done much work in 3D, but JanOtt brought it up, and I thought I could document the major differences through testing. Here are the results, without a thorough analysis:

Part 3: Physics2D.Simulate()

About the problems of FixedUpdate

Parts 1 and 2 of this blog post went into detail about how to use Rigidbody2D correctly. According to this traditional knowledge, you should never change a Rigidbody2D outside of FixedUpdate. But the approach has major shortcomings:

- You have to split the code of your character class into two different parts. One part handles game and input logic in Update(). The other part handles physical interactions in FixedUpdate(). This is a conceptual burden, and it increases the complexity of your code.

- If you want to change a Rigidbody2D in response to mouse clicks or button presses, you need to carefully pass those events from Update to FixedUpdate in a list or queue. If you instead access the Input class in FixedUpdate directly, there is a small chance that you miss some events or handle some events twice. This is because there can be multiple Update cycles or FixedUpdate cycles in a row, as we have seen in Part 1.

- The previous bullet point adds a slight delay to your input processing. Player input has to wait for the next FixedUpdate cycle. Then the player has to wait some more for the next Update cycle before the effect can be seen.

- Rigidbodies are moved in their own time step within FixedUpdate, so the positions of their rendered sprites need to be interpolated in the Update cycle. Rigidbody interpolation works quite well, but it displays the objects at a position in the past. This adds a bit of delay to any action happening in the game world. Colliders in the physics world and their respective sprites will not match, which makes physical interactions of the character with the environment unpredictable. The player will also perceive their input actions as delayed even further.
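The following simplified one-dimensional model of rigidbody interpolation illustrates why interpolated objects appear in the past. It is an assumption for illustration, not Unity's actual implementation. The rendered position blends the previous and the latest physics positions. Right after a physics step, the sprite is therefore still drawn at the previous position, one full fixed step behind. At Unity's default Time.fixedDeltaTime of 0.02 s, that is 20 ms. InterpolationModel and RenderedPosition are hypothetical names.

```csharp
using System;

public static class InterpolationModel
{
    // Simplified model of rigidbody interpolation along one axis: the rendered
    // position blends the previous and the latest physics position based on
    // how much time has passed since the latest physics step.
    public static float RenderedPosition(float previousPhysicsPosition, float latestPhysicsPosition,
                                         float timeSinceLatestStep, float fixedDeltaTime)
    {
        float alpha = Math.Clamp(timeSinceLatestStep / fixedDeltaTime, 0f, 1f);
        return previousPhysicsPosition + (latestPhysicsPosition - previousPhysicsPosition) * alpha;
    }
}
```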

Oh dear... But don't lose your hope yet!

About manual physics updates

The magical ingredients for predictable physics with smooth movement in Unity are called Physics2D.autoSimulation and Physics2D.Simulate().

The default value of autoSimulation is true, which means that the internal physics step is executed automatically after the FixedUpdate cycle. If you turn this off, you are responsible for executing the internal physics step yourself by calling Physics2D.Simulate().

This is very powerful. You can call Rigidbody2D methods in Update and then manually force an internal physics step at the end of the Update cycle. There is no more need for interpolation, and if you are careful, you won't have to touch FixedUpdate ever again!

This approach is not widely known. I first considered it after talking with MelvMay from Unity in the Unity forum. He wrote the Torque 2D game engine and is now a physics programmer at Unity. The forum post contains a lot of in-depth knowledge about 2D physics:

For ultimate smooth motion with physics sacrificing some performance and reduced determinism, updating the physics simulation per-frame is better using Physics2D.Simulate. This'll be a lot easier when the following lands too: https://twitter.com/melvmay/status/1164591679535497219?s=20

MelvMay

As you can see, the internal physics step may at some point become configurable through the settings. Once that happens, you can simply switch the setting to Update and you're done. Until then, you need the code shown further below, after the list of shortcomings.

Shortcomings of manual physics updates

Like everything in life, this approach also comes with some drawbacks.

It ties the physics step to the framerate

You need to call Physics2D.Simulate() from an Update method that runs last, and you pass it Time.deltaTime. Normally, Unity uses Time.fixedDeltaTime for the internal physics update, and that value stays constant. Time.deltaTime, on the other hand, may vary, as we have seen in the chapter about V-Sync. As MelvMay pointed out in the quote above, this may reduce determinism. When the framerate starts to oscillate, physics calculations can lead to unexpected results.

You need to be very careful, because players could abuse this to gain an advantage. For example, players could try to cheat by reducing the frame rate, which makes the character move farther each frame. If successful, they could potentially jump through walls. However, this may be an acceptable risk for single-player games without a leaderboard.

So think twice about this. You should also make sure that you never pass a value that is too high to Physics2D.Simulate().
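A bit of arithmetic illustrates the cheating scenario and why a cap helps. The sketch assumes purely discrete overlap checks (no continuous collision detection) and a character moving at a constant speed. The class and method names are hypothetical.

```csharp
using System;

public static class FrameRateTunneling
{
    // Caps the step passed to Physics2D.Simulate(), in the same spirit as the
    // Time.maximumDeltaTime check in the ManualPhysicUpdate class.
    public static float SafeDeltaTime(float deltaTime, float maximumDeltaTime)
        => Math.Min(deltaTime, maximumDeltaTime);

    // Distance covered in one physics step when the step equals the frame time.
    public static float StepDistance(float speed, float deltaTime) => speed * deltaTime;

    // Crude discrete-collision check: if one step carries the character from fully
    // in front of a wall to fully behind it, overlap tests never see the wall.
    public static bool MaySkipWall(float stepDistance, float characterWidth, float wallThickness)
        => stepDistance > characterWidth + wallThickness;
}
```

For example, at 10 units per second, one step at 60 fps covers about 0.17 units, but at 5 fps it covers 2 units. That is more than a 1-unit-wide character plus a 0.5-unit wall. A hypothetical cap of 0.1 s would limit the step to 1 unit.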

It does not work properly with Rigidbody2D.MovePosition()

MovePosition() is a bit special. We looked at the method in the chapter about rigidbodies. Try the following in the test application: enable manual physics updates, use MovePosition on a lane, and reduce the physics rate below the framerate. The character will then move slower than on a lane that uses SetVelocity. But that's not what we wanted! We explicitly wanted to get rid of any dependency on FixedUpdate!

Unfortunately, this seems to be how MovePosition() works. It appears to calculate an internal velocity vector from the position argument and Time.fixedDeltaTime, instead of the value passed to Physics2D.Simulate(). I have to admit, though, that I'm not quite sure.

An easy workaround is to implement your own alternative to MovePosition() as an extension method:
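The following sketch shows the arithmetic of why the character would move slower under that unconfirmed guess. It models the hypothesis from the paragraph above, not Unity's documented behavior. The names are hypothetical.

```csharp
public static class MovePositionHypothesis
{
    // Hypothesis only: MovePosition() turns the requested move into a velocity
    // using Time.fixedDeltaTime, while Physics2D.Simulate() integrates that
    // velocity over the step you pass in (Time.deltaTime here).
    public static float DistanceCovered(float requestedDistance, float fixedDeltaTime, float simulateStep)
    {
        float impliedVelocity = requestedDistance / fixedDeltaTime;
        return impliedVelocity * simulateStep;
    }
}
```

Take a physics rate of 30 Hz (Time.fixedDeltaTime = 1/30 s) and a framerate of 60 fps. DistanceCovered(1f, 1f / 30f, 1f / 60f) then yields about 0.5, i.e. half the requested distance per frame. Dividing by Time.deltaTime instead of Time.fixedDeltaTime removes that mismatch.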

```csharp
using UnityEngine;

public static class Extensions
{
    /// <summary>
    /// An alternative to Rigidbody2D.MovePosition() which works with
    /// manual physics updates.
    /// </summary>
    public static void ShiftPosition(this Rigidbody2D rigidbody, Vector2 position)
    {
        // Avoid dividing by zero, e.g. while the game is paused (Time.timeScale == 0).
        if (Time.deltaTime <= 0f)
            return;

        // Velocity that reaches the target position within the next
        // Physics2D.Simulate(Time.deltaTime) call.
        rigidbody.velocity = (position - rigidbody.position) / Time.deltaTime;
    }
}
```

You can try out this method by selecting Custom Move as the movement type of a lane in the test application. Be aware that this method does not fully replicate MovePosition(). For example, it does not temporarily disable linear drag.

FixedUpdate() still runs

It's a bit strange that FixedUpdate() still runs after setting Physics2D.autoSimulation to false, but that's the way it is. I'm not quite sure how other methods, such as collision callbacks, are affected. Sorry, you need to figure this out on your own. Just in case, it is probably a good idea to set Time.fixedDeltaTime to match your default frame time (for example 1/60 s at 60 fps).

It's not widely used

You need to take this into account, because if you use this approach and run into problems, you are probably on your own. This is even more so if you use special Unity features I did not anticipate, or packages from the Asset Store that interact with physics.

Using manual physics updates

So you decided to use Physics2D.Simulate(), do everything in Update and live a happy life. Here's how it's done:

public class ManualPhysicUpdate : MonoBehaviour

{

void Awake()

{

Physics2D.autoSimulation = false;

}

/// <summary>

/// Get's called at the end of the Update cycle.

/// Defined in project settings under "Script Execution Order".

/// </summary>

void Update()

{

float safeDeltaTime =

Time.deltaTime < Time.maximumDeltaTime ?

Time.deltaTime :

Time.maximumDeltaTime;

Physics2D.Simulate(safeDeltaTime);

}

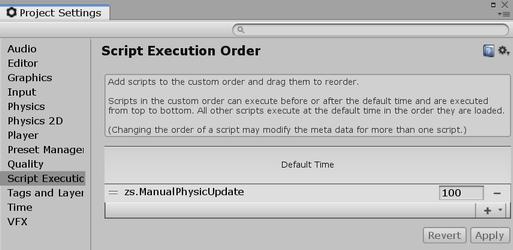

}Yes, it's as simple as that! Just make sure, that there is exactly one instance of this class. You also need to add the class to the Script execution order of your project, because it needs to run after all other Update methods were called:

Final words

Well, there you go. There are many ways to move a player around. Be aware of the advantages and disadvantages of each approach, and make sure that all your requirements are met. The right choice depends heavily on the type of your game, on the required movement accuracy, and on whether you need full-fledged physical interactions.